In today’s data-driven world, efficiently processing large volumes of data is crucial for business success. That’s where batch jobs come in: they automate repetitive, resource-intensive tasks behind the scenes. Whether you’re generating reports, migrating data, or integrating systems, batch processing is the silent engine driving reliability and scalability in enterprise applications.

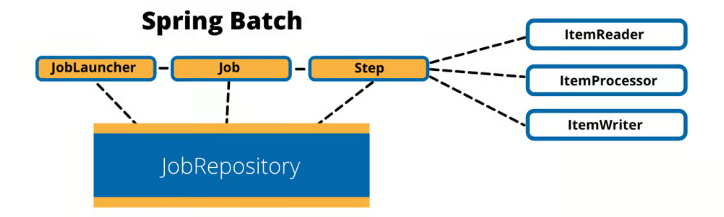

As a Java backend engineer, I’ve seen how Spring Boot and Spring Batch have transformed the way we design and manage batch jobs. Spring Batch provides transaction management and fault tolerance through skip and retry policies. Spring’s scheduling support or an external scheduler triggers the jobs. Because Spring Batch records each job execution in a JobRepository, we can monitor job status, restart a failed job from where it stopped, and scale out with multi-threaded or partitioned steps. That keeps critical business processes running smoothly, even as data volumes grow.

But the real magic happens when we combine batch jobs with modern DevOps practices: integrating them into CI/CD pipelines, leveraging cloud-native tools, and orchestrating jobs across distributed systems. This combination not only boosts productivity but also makes systems more resilient and easier to change.

I’m curious:

- How are you leveraging batch jobs in your projects?

- What challenges have you faced, and what solutions worked best for you?

- Are you exploring new patterns or technologies to optimize batch processing?

Let’s share experiences and insights! Drop your thoughts or questions in the comments below. 👇

#Java #SpringBoot #SpringBatch #BackendDevelopment #BatchJobs #Microservices #CloudNative #DevOps #DataProcessing #SoftwareEngineering #TechCommunity